AI Governance: Policies and Regulations for AI Development

3 min read

14 Jul 2024

As artificial intelligence (AI) continues to advance rapidly, there is a growing need for robust governance frameworks to ensure responsible and ethical AI development and deployment. Policies and regulations play a crucial role in guiding the development, use, and impact of AI technologies, addressing concerns related to ethics, safety, privacy, and accountability.

Ethical Principles: Ethical principles provide a foundation for AI governance, guiding developers, policymakers, and users in the responsible use of AI technologies. Principles such as fairness, transparency, accountability, and privacy help ensure that AI systems are developed and deployed in ways that respect human rights, dignity, and values. Ethical frameworks, such as the IEEE Ethically Aligned Design and the Montreal Declaration for Responsible AI, provide guidance for integrating ethical considerations into AI development processes.

Safety and Reliability: Ensuring the safety and reliability of AI systems is central to AI governance. Regulatory frameworks, such as the European Union's AI Act and the United States' AI Regulatory Principles, aim to mitigate risks associated with AI technologies, including system failures, biases, and unintended consequences. Safety standards, testing protocols, and certification processes help assess and reduce these risks across the design, development, and deployment of AI systems.

Transparency and Explainability: Transparency and explainability are essential for building trust and accountability in AI systems. Policies and regulations increasingly require developers to disclose AI system capabilities, limitations, and decision-making processes. Data protection laws add to this. The General Data Protection Regulation (GDPR) restricts decisions based solely on automated processing that have legal or similarly significant effects, and gives individuals a right to meaningful information about the logic involved. The California Consumer Privacy Act (CCPA) gives consumers the right to know what personal information is collected about them and how it is used. These provisions matter most in high-stakes applications such as healthcare and finance.

Data Privacy and Security: Protecting data privacy and security is a fundamental aspect of AI governance. Regulations, such as the GDPR, the Health Insurance Portability and Accountability Act (HIPAA), and the Personal Information Protection and Electronic Documents Act (PIPEDA), establish requirements for collecting, processing, and storing personal data used in AI systems. Additionally, cybersecurity standards and best practices help safeguard AI systems from data breaches, cyber attacks, and unauthorized access.

Bias and Fairness: Addressing bias and promoting fairness in AI systems is critical for AI governance. Regulations aim to prevent discriminatory outcomes and ensure equitable access to AI technologies and opportunities. Algorithmic impact assessments, fairness metrics, and bias mitigation techniques help identify and reduce biases in AI systems throughout their design and deployment.
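To make "fairness metrics" concrete, here is a minimal sketch of two commonly used group metrics, the demographic parity difference and the disparate impact ratio. The function names and the sample data are illustrative assumptions, not part of any regulation or library, and real assessments typically combine several metrics with domain review.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        # No group receives positive outcomes, so the rates are equal.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical example: 1 = approved, 0 = denied, for two groups "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(predictions, groups))
print(demographic_parity_difference(predictions, groups))
print(disparate_impact_ratio(predictions, groups))
```

In this made-up sample, group "a" is approved three times out of four and group "b" once out of four, so the gap is large. A ratio below 0.8 is sometimes treated as a warning sign (the "four-fifths rule" from US employment guidance), though no single threshold settles whether a system is fair.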

Accountability and Oversight: Establishing mechanisms for accountability and oversight is essential for AI governance. Regulators such as the Federal Trade Commission (FTC) apply existing consumer protection law to harmful or deceptive uses of AI and investigate incidents of non-compliance or harm, while agencies such as the European Union Agency for Cybersecurity (ENISA) provide guidance on securing AI systems. Additionally, liability frameworks and insurance mechanisms help allocate responsibility and provide recourse for individuals affected by AI-related harms or accidents.

International Cooperation: Given the global nature of AI technologies, international cooperation and collaboration are essential for effective AI governance. Multilateral initiatives, such as the OECD AI Principles and the G20 AI Principles, facilitate dialogue and cooperation among countries to develop common frameworks and standards for AI governance. Bilateral agreements and treaties further promote information sharing, capacity building, and coordination on AI-related issues across borders.

Stakeholder Engagement: Engaging stakeholders, including governments, industry, academia, civil society, and the public, is crucial for inclusive and effective AI governance. Public consultations, advisory processes, and stakeholder forums give diverse voices the opportunity to contribute to AI policy development and implementation. By fostering collaboration and dialogue, stakeholder engagement promotes transparency, accountability, and legitimacy in AI governance processes.

Conclusion: AI governance is essential for ensuring that AI technologies are developed and deployed responsibly, ethically, and in the public interest. Policies and regulations address a wide range of issues, including ethics, safety, transparency, privacy, bias, accountability, and international cooperation. By fostering a supportive regulatory environment and engaging stakeholders, governments can promote innovation while mitigating risks and ensuring that AI benefits society.

More Articles

The Algorithmic Revolution: AI Meets Blockchain for Enhanced Security

6 min read | 16 Aug 2024

From Diamonds to Diplomas: How Blockchain Can Verify Anything

4 min read | 15 Aug 2024

The Future is Decentralized: How Blockchain is Shaping a New World Order

4 min read | 23 Aug 2024

Unlocking the Potential of Data: How Blockchain Empowers Individuals

4 min read | 22 Aug 2024

More Articles

LG Suitcase TV: A Portable Entertainment Center for Travelers

2 min read | 15 Apr 2024

DJI Avata: The Future of Personal Transportation Takes Flight

4 min read | 14 Apr 2024

Philips Signe Floor Lamp: Illuminating Your Home with Style

2 min read | 13 Apr 2024

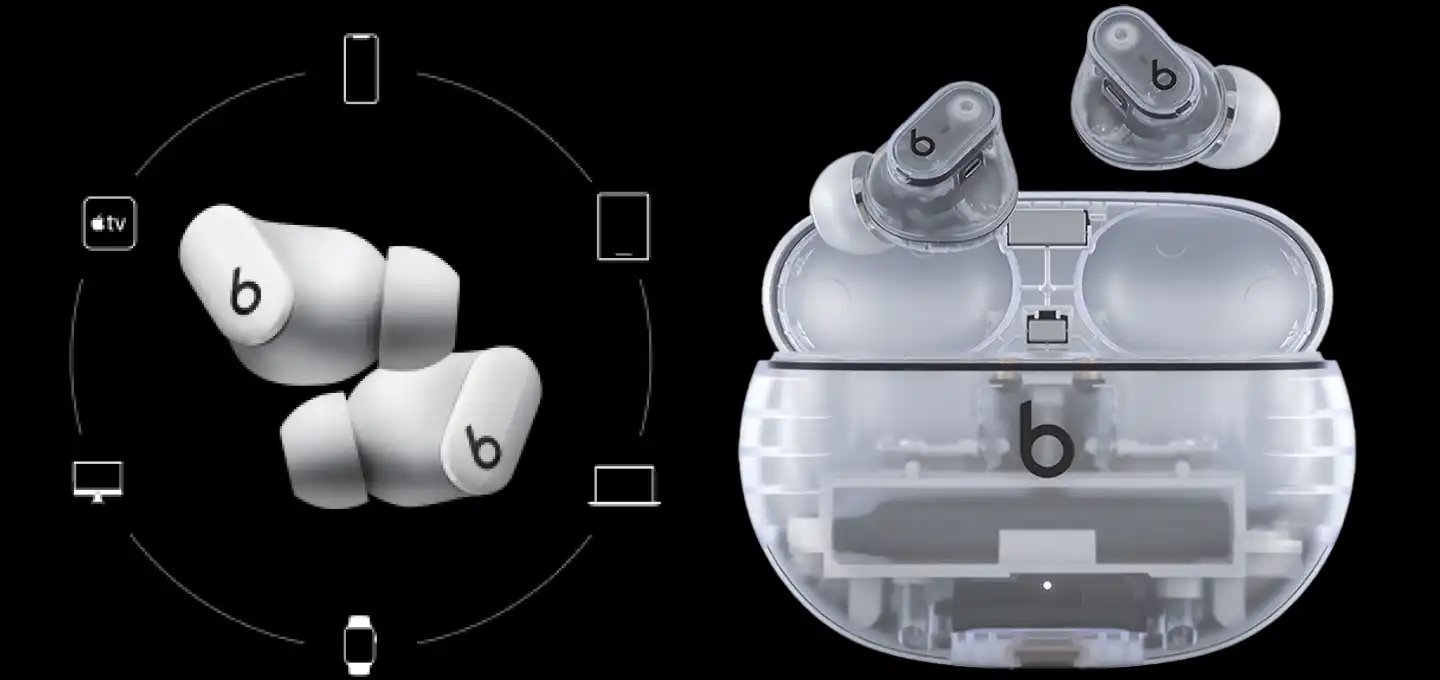

Beats Studio Buds: The Ultimate Wireless Earbuds for Music Lovers

2 min read | 12 Apr 2024